Week 40: Sprint 0

Block programming

The block programming work has been divided into two parts. One part is responsible for building a Blockly site and generating Python 2.7 code from blocks. The other part will develop an application that receives the Python 2.7 code from the Blockly site, then interprets and evaluates it. This latter application will include the Pepper SDK so that it can communicate with Pepper.
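The split described above can be pictured with a small sketch. It assumes the Blockly site hands over the generated code as a plain string and that the receiving application exposes a single `robot` object to that code. `FakeRobot`, `say`, and `run_generated_code` are hypothetical names chosen for illustration and are not part of the Pepper SDK.

```python
class FakeRobot(object):
    """Hypothetical stand-in for the Pepper-facing layer.

    It records calls instead of talking to a real robot.
    """

    def __init__(self):
        self.calls = []

    def say(self, text):
        self.calls.append(("say", text))


def run_generated_code(source, robot):
    """Evaluate a string of generated Python with `robot` injected by name."""
    namespace = {"robot": robot}
    # Compile first so syntax errors point at a readable pseudo file name.
    code = compile(source, "<blockly>", "exec")
    # Note: exec is not a sandbox; the code runs with full interpreter access.
    exec(code, namespace)
    return namespace


# Assumed example of what the Blockly site might generate.
generated = "for i in range(2):\n    robot.say('hello %d' % i)\n"

robot = FakeRobot()
run_generated_code(generated, robot)
print(robot.calls)
```

Keeping the robot behind one injected object means the generated code can be tested without a Pepper on the network, which may help while the two parts are developed separately.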

So far, the Blockly group has looked at the test repository for Blockly and tried out some different blocks. There have been some issues generating Python 2.7 code, which will need to be looked at in the coming sprint.

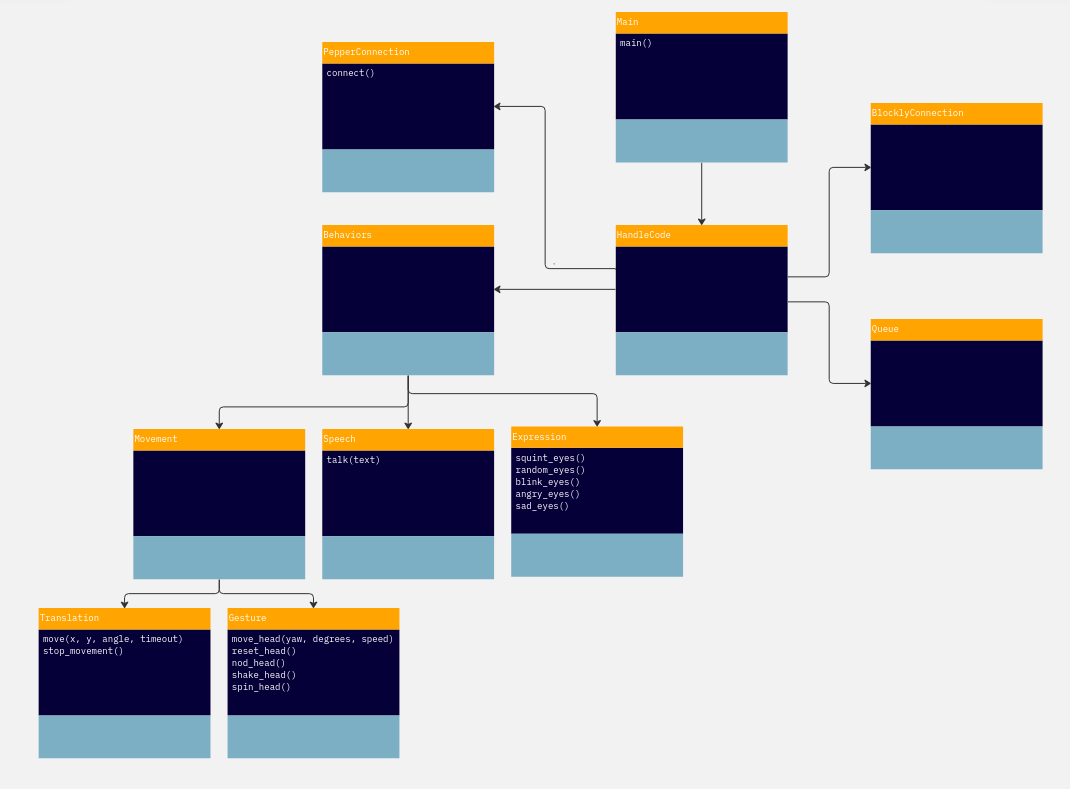

The Pepper communication group has set up a UML schema for the application. They have also started working on Pepper behaviors such as text-to-speech, changing the LEDs in the eyes, and rotating the head.
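As a rough illustration of these three behaviors, the sketch below calls the NAOqi methods `ALTextToSpeech.say`, `ALLeds.fadeRGB`, and `ALMotion.setAngles`. It is not the group's implementation: the proxies are passed in as parameters, and `RecordingProxy` and `greet` are hypothetical names used so the example runs without a robot. The spoken text, color, angle, and speed values are arbitrary assumptions.

```python
class RecordingProxy(object):
    """Hypothetical stand-in for a NAOqi proxy; records every method call."""

    def __init__(self, log):
        self._log = log

    def __getattr__(self, method):
        # Called only for attributes that do not exist, i.e. the proxy methods.
        def call(*args):
            self._log.append((method,) + args)
        return call


def greet(tts, leds, motion):
    """Speak, turn the eye LEDs green, and turn the head slightly."""
    tts.say("Hello")
    # Colour is 0x00RRGGBB; the last argument is the fade duration in seconds.
    leds.fadeRGB("FaceLeds", 0x0000FF00, 1.0)
    # Target angle in radians, then the fraction of maximum speed to use.
    motion.setAngles("HeadYaw", 0.5, 0.2)


# On a real robot the proxies would come from the SDK instead, for example:
#   from naoqi import ALProxy
#   tts = ALProxy("ALTextToSpeech", "<robot-ip>", 9559)
log = []
greet(RecordingProxy(log), RecordingProxy(log), RecordingProxy(log))
print(log)
```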

Web interaction

Initially, the group had issues connecting to and interacting with Pepper, which resulted in a slow start. With the help of the Rock-paper-scissors group's effort to set up a dev container, the group was able to get started with Pepper by the end of the sprint.

The initial plan was to understand how speech recognition could be used to fetch data from a given site. This was soon postponed, as it made more sense to first understand and build upon a pre-existing feature that simply fetched two sentences from Wikipedia.

It turned out that the pre-existing feature relied heavily on a library that parses data from Wikipedia. This limits how far the feature can be developed further. Due to the slow start, not much has been produced yet, and most of the work will be moved to sprint 1.

Rock-paper-scissors

A development environment was created using VS Code dev containers. This will hopefully make it easier for us to develop under the same conditions and in the same environment.

With some inspiration from the pepper-controller project, we started experimenting with and exploring the different NAOqi services available on Pepper and how to use some of them, more specifically ALMotion and the joint control API.

While reading the technical specifications for the Pepper robot, we discovered that its hands are limited to being either open or closed, and that control of individual fingers is not possible. This will be one of the big issues for us to solve in the upcoming sprint.
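To make the limitation concrete, the sketch below maps the three game gestures onto the two hand states, using the ALMotion methods `openHand` and `closeHand`. `RecordingMotion` and `show_gesture` are hypothetical names, and the choice of `"RHand"` is an assumption. The sketch only shows which gestures fit the two states and does not propose a solution for scissors.

```python
class RecordingMotion(object):
    """Hypothetical stand-in for an ALMotion proxy; records hand commands."""

    def __init__(self):
        self.calls = []

    def openHand(self, hand_name):
        self.calls.append(("openHand", hand_name))

    def closeHand(self, hand_name):
        self.calls.append(("closeHand", hand_name))


def show_gesture(motion, gesture, hand="RHand"):
    """Show a gesture if it fits the open/closed limitation.

    Returns True when the gesture could be shown and False otherwise.
    """
    if gesture == "paper":
        motion.openHand(hand)
    elif gesture == "rock":
        motion.closeHand(hand)
    else:
        # Scissors needs individual finger control, which is not available.
        return False
    return True


motion = RecordingMotion()
results = [show_gesture(motion, g) for g in ("rock", "paper", "scissors")]
print(results)
```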

Image recognition

During the initial sprint we were able to find two open source data sets, both for rock-paper-scissors. However, one of these is under an “Attribution-ShareAlike” license while the other is under an “MIT” license. Since the Attribution-ShareAlike license requires anything built on the material to be distributed under the same license, we chose to work with the data set that is under an MIT license. If we need more training data at a later stage of our project we may include the second set, but this depends on stakeholders and other project groups as well.

The sprint did not go entirely smoothly, as we had some issues with scheduling and colliding commitments. To alleviate this going into sprint 1, we decided to commit to being on site between 13 and 17 every weekday instead of the previous 8 to 12. We also chose to reschedule our sprint deadlines to Wednesdays instead of Mondays. As a result, most of the planned work for sprint 0 was moved to the sprint 1 backlog.